Software development is undergoing a fundamental structural shift. The adoption of Generative AI (GenAI) is no longer limited to enhancing individual features or accelerating isolated tasks; it is reshaping how software is designed, built, operated, and evolved. This transformation marks a shift from AI-integrated systems, in which intelligence is layered onto existing architectures, to AI-native development, in which intelligence is embedded at the core of platforms from the outset.

At the centre of this shift is a redefined Developer Experience (DevEx) and an expanded role for Platform Engineering. Static platforms are giving way to intelligent, adaptive, and self-optimizing ecosystems—systems designed not merely to run software, but to reason, learn, and collaborate with developers.

What is AI-Native Development?

AI-native development refers to software systems designed from the ground up with intelligence as a first-class architectural principle. Rather than treating AI as an add-on or optimization layer, AI-native platforms embed intelligence directly into their core, shaping how the system behaves, evolves, and makes decisions.

Unlike traditional or AI-integrated systems, where models are bolted onto otherwise static architectures, AI-native platforms are inherently dynamic. They are built to:

- Learn continuously through feedback loops across data, usage, and outcomes

- Adapt automatically to changing conditions, workloads, and contexts

- Make context-aware, data-driven decisions in real time

- Optimize workflows and system behavior with minimal human intervention

In AI-native systems, intelligence is not a feature that can be switched on or off. It is the foundation upon which the platform is designed, operated, and improved. The system does not merely execute predefined logic; it observes, reasons, and evolves as part of its normal operation.

Redefining Developer Experience (DevEx) with GenAI

Generative AI acts as a powerful catalyst that fundamentally reshapes the day-to-day experience of developers. It transforms platforms from passive collections of tools and pipelines into active, intelligent collaborators that participate across the entire software development lifecycle. Instead of merely responding to predefined commands, AI-native platforms interpret intent, anticipate needs, and provide guidance proactively—dramatically accelerating delivery while reducing friction.

1. The Platform as an Intelligent Partner

One of the most significant shifts introduced by GenAI is a sharp reduction in the cognitive overhead associated with modern software development. Developers no longer need to memorize complex CLI commands, navigate fragmented documentation, or manually orchestrate infrastructure, security, and observability workflows across multiple tools.

Through natural language interaction, developers can express intent, such as:

“Provision a secure staging environment with observability enabled.”

The AI-powered platform understands this request within the context of organizational standards, existing infrastructure, and application requirements. It translates intent into concrete technical actions—selecting approved templates, configuring security controls, provisioning resources, enabling monitoring, and wiring dependencies automatically.
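The translation from intent to actions can be sketched in a few lines. This is a deliberately simplified illustration, not how any particular platform works: the `ACTION_RULES` table, the action names, and the `plan_from_intent` function are all hypothetical, and keyword matching stands in for the language model and policy engine a real platform would use.

```python
from dataclasses import dataclass, field

# Hypothetical keyword-to-action table. A real platform would combine an LLM
# with organizational policy instead of plain substring matching.
ACTION_RULES = {
    "staging": "select_template:staging-env",
    "secure": "apply_security_baseline",
    "observability": "enable_monitoring",
}


@dataclass
class Plan:
    intent: str
    actions: list[str] = field(default_factory=list)


def plan_from_intent(intent: str) -> Plan:
    """Map a natural-language request to an ordered list of platform actions."""
    text = intent.lower()
    actions = [action for keyword, action in ACTION_RULES.items() if keyword in text]
    # Provisioning runs last, after the template and controls have been chosen.
    actions.append("provision_resources")
    return Plan(intent=intent, actions=actions)


if __name__ == "__main__":
    request = "Provision a secure staging environment with observability enabled."
    for step in plan_from_intent(request).actions:
        print(step)
```

The point of the sketch is the shape of the output: an explicit, reviewable plan that the platform can show to the developer before executing anything.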

By abstracting away low-level complexity, the platform significantly reduces mental load and decision fatigue. Developers are freed from repetitive operational tasks, allowing them to focus on higher-value work, such as system design, business logic, and innovation. In this model, the platform evolves into a trusted intelligent partner, handling execution details while developers retain ownership of intent, architecture, and outcomes.

2. Omnipresent Expertise and Proactive Assistance

In AI-native platforms, GenAI functions as an ever-present domain expert embedded directly into the developer workflow. This intelligence is not confined to a separate tool or review phase; it operates continuously across coding, testing, deployment, and runtime operations. As developers work, the platform observes context—code changes, configuration choices, dependency graphs, runtime signals—and provides guidance precisely when and where it is needed.

This embedded expertise delivers real-time, contextual assistance by surfacing issues such as:

- Dependency and configuration errors before they propagate downstream

- Security vulnerabilities identified during design and implementation, not post-deployment

- Performance bottlenecks inferred from both code structure and runtime behavior

- Architectural anti-patterns that may impact scalability, reliability, or maintainability

Rather than reacting after failures occur, the platform proactively identifies emerging risks and optimization opportunities. It surfaces recommendations aligned with internal standards and proven best practices, ensuring consistency across teams. In more advanced scenarios, the platform can autonomously generate code patches, refactor problematic components, or scaffold new modules from high-level descriptions. Problem-solving shifts from reactive debugging to continuous, proactive optimization—reducing incidents, shortening feedback loops, and improving overall system quality.
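As a small, concrete instance of catching a dependency problem before it propagates, the sketch below flags requirements that are not pinned to an exact version. It is a plain rule-based check, not a GenAI technique, and it assumes a simple `name==version` convention. The `find_unpinned` function and the pinning policy are illustrative assumptions; real requirement files allow more syntax than this handles.

```python
import re

# A requirement counts as pinned only if it uses an exact '==' version.
# This pattern is a simplification of the real requirements-file grammar.
PINNED = re.compile(r"^[A-Za-z0-9_.\-\[\]]+==[^=\s]+$")


def find_unpinned(requirements_text: str) -> list[str]:
    """Return requirement lines that are not pinned to an exact version."""
    findings = []
    for raw in requirements_text.splitlines():
        # Drop trailing comments and surrounding whitespace.
        line = raw.split("#", 1)[0].strip()
        if not line:
            continue
        if not PINNED.match(line):
            findings.append(line)
    return findings


if __name__ == "__main__":
    sample = "requests==2.31.0\nflask>=2.0\n# tooling\nnumpy\n"
    for finding in find_unpinned(sample):
        print(f"unpinned dependency: {finding}")
```

A platform could run checks like this on every change and attach the findings to the developer's editor or pull request, leaving model-based analysis for the cases that rules cannot express.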

3. Enhanced Discoverability and New Interaction Models

AI-native platforms dramatically improve discoverability by ingesting and understanding the full spectrum of platform knowledge—documentation, APIs, service catalogs, architectural guidelines, and organizational standards. This unified understanding removes the traditional friction developers face when navigating fragmented information spread across wikis, repositories, and tribal knowledge.

Developers can interact with the platform using natural language queries such as:

“What’s the recommended path for building a RAG-based service?”

GenAI interprets the question in context and surfaces the most relevant components, templates, workflows, and reference architectures instantly. Importantly, modern interaction models go beyond passive recommendations. With capabilities such as Model Context Protocol (MCP) or similar tool-execution frameworks, these interfaces can take direct action—provisioning services, configuring pipelines, triggering deployments, or wiring dependencies automatically.
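The tool-execution idea can be illustrated with a minimal registry and dispatcher. This sketch does not use the MCP SDK or follow its wire format. The `tool` decorator, the `execute` function, the call shape, and both example tools are hypothetical, chosen only to show how a structured request produced by a model might be routed to platform functions.

```python
from typing import Callable

# Registry of callable platform actions, keyed by tool name (hypothetical).
TOOLS: dict[str, Callable[..., str]] = {}


def tool(name: str):
    """Decorator that registers a function as an invokable tool."""
    def register(fn: Callable[..., str]) -> Callable[..., str]:
        TOOLS[name] = fn
        return fn
    return register


@tool("provision_service")
def provision_service(name: str, environment: str) -> str:
    # Placeholder: a real implementation would call the platform's APIs.
    return f"provisioned {name} in {environment}"


@tool("trigger_deployment")
def trigger_deployment(service: str) -> str:
    return f"deployment triggered for {service}"


def execute(call: dict) -> str:
    """Dispatch a structured tool call of the form {'tool': ..., 'arguments': {...}}."""
    name = call["tool"]
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name](**call.get("arguments", {}))


if __name__ == "__main__":
    print(execute({
        "tool": "provision_service",
        "arguments": {"name": "search-api", "environment": "staging"},
    }))
```

Keeping the set of tools explicit is a design choice worth noting: the model can only trigger actions the platform team has registered, which gives a natural place to apply permissions and auditing.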

This marks a fundamental shift in human–platform interaction. Developers focus on articulating intent and desired outcomes, while the platform determines and executes the optimal implementation path. The result is a new cognitive architecture in which humans define the “what,” and the platform reliably handles the “how.”

4. From Code-Centric to Specification-Centric Development

As natural language and high-level specifications become primary interfaces for programming, the role of the developer evolves fundamentally. Developers transition from being primarily code producers to becoming designers of intelligence, behavior, and constraints.

Instead of writing every line of code manually, developers focus on defining:

- Intent and business objectives

- Functional and non-functional constraints

- Desired outcomes and quality attributes

- High-level workflows and system interactions

The AI-native platform translates these specifications into executable artifacts—generating code, creating tests, deploying services, and continuously optimizing performance and reliability. Repetitive and error-prone tasks are automated by default, while human expertise is applied where it adds the most value.
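One way to picture a specification as a first-class artifact is a small typed object that states the objective and its non-functional constraints, and can be checked against observed behavior. The `ServiceSpec` fields, the thresholds, and the `violations` helper below are assumptions made for illustration; the article does not prescribe a specification format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServiceSpec:
    """Hypothetical specification: what the service is for and what it must guarantee."""
    name: str
    objective: str
    max_p95_latency_ms: int
    min_availability: float  # fraction of successful requests, e.g. 0.999


def violations(spec: ServiceSpec, p95_latency_ms: float, availability: float) -> list[str]:
    """Compare observed metrics with the spec and list any broken constraints."""
    problems = []
    if p95_latency_ms > spec.max_p95_latency_ms:
        problems.append(
            f"p95 latency {p95_latency_ms} ms exceeds limit {spec.max_p95_latency_ms} ms"
        )
    if availability < spec.min_availability:
        problems.append(
            f"availability {availability} is below minimum {spec.min_availability}"
        )
    return problems


if __name__ == "__main__":
    spec = ServiceSpec(
        name="checkout",
        objective="Complete purchases reliably",
        max_p95_latency_ms=200,
        min_availability=0.999,
    )
    for problem in violations(spec, p95_latency_ms=250, availability=0.9995):
        print(problem)
```

Because the constraints are data rather than prose, the same object could drive code generation, test generation, and runtime checks.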

This collaborative model redefines software creation itself. Teams move faster without sacrificing consistency or quality, architectures remain aligned with best practices, and systems evolve more safely over time. Development becomes less about managing complexity and more about shaping intelligent systems that can adapt and improve continuously.

A Foundational Architectural Distinction

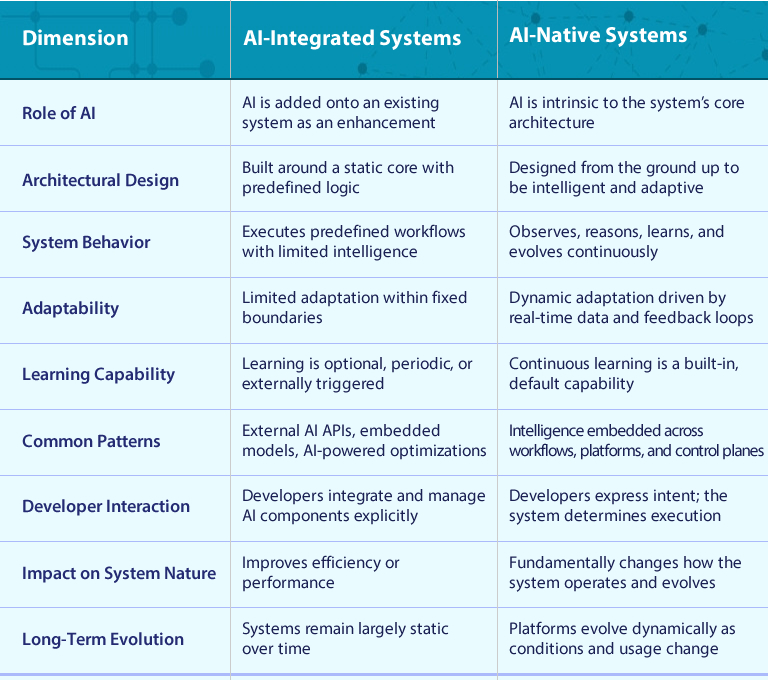

Understanding AI-native development requires a clear distinction from AI-integrated approaches. While both leverage artificial intelligence, they differ fundamentally in architectural intent, system behavior, and long-term impact.

Table: AI-Integrated Systems vs. AI-Native Systems

In short, AI-integrated systems use AI to enhance existing capabilities. AI-native systems are built around intelligence, making adaptation, learning, and optimization core to their existence.

The New Role of Platform Engineering in an AI-Native World

The convergence of Generative AI and Platform Engineering represents a fundamental shift in how platforms are conceived, built, and operated. Platform teams are no longer limited to providing shared infrastructure and tooling; they evolve into architects of intelligent development ecosystems. Their responsibility expands from ensuring scalability and reliability to shaping how intelligence is embedded, governed, and experienced across the organization.

In an AI-native world, platforms do not merely host applications; they actively participate in the creation, optimization, and evolution of software.

1. Internal Developer Platforms as AI Launchpads

Internal Developer Platforms (IDPs) are emerging as the primary launchpads for AI-powered applications. Within an AI-native architecture, IDPs must support the full AI lifecycle while absorbing the operational complexity that would otherwise slow down application teams. This includes managing:

- Model training, fine-tuning, and evaluation workflows

- Scalable, cost-efficient inference pipelines

- GPU, accelerator, and resource scheduling across teams

- Complex data pipelines spanning ingestion, feature engineering, and feedback loops

By centralizing these capabilities, the platform shields application teams from infrastructural and operational concerns. Developers can focus on applying AI to real business problems, while the platform ensures scalability, security, compliance, and operational excellence by default. The IDP becomes a force multiplier, enabling rapid experimentation without sacrificing governance or stability.

2. Self-Service, Abstracted AI Infrastructure

AI-native platforms further redefine platform engineering by offering fully self-service, abstracted AI infrastructure. The traditional friction of MLOps—capacity planning, environment provisioning, dependency management, and deployment orchestration—is encapsulated behind intuitive platform interfaces.

Developers gain instant, on-demand access to:

- CPUs, GPUs, and specialized accelerators

- Storage, networking, and secure data access layers

- Training, experimentation, and inference environments

What previously required weeks of cross-team coordination can often be achieved in minutes. AI infrastructure becomes a fluid, elastic capability, provisioned dynamically based on developer intent and workload characteristics rather than manual configuration. This abstraction enables speed without compromising control, allowing platforms to enforce policies, cost constraints, and security transparently.
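Transparent policy enforcement behind a self-service interface can be sketched as a quota check that runs on every request. The `TeamQuota` type, the `request_gpus` function, and the per-team GPU limit are hypothetical; a real platform would delegate to its scheduler and cost controls.

```python
from dataclasses import dataclass


@dataclass
class TeamQuota:
    """Hypothetical per-team GPU budget tracked by the platform."""
    max_gpus: int
    gpus_in_use: int = 0


def request_gpus(quotas: dict[str, TeamQuota], team: str, count: int) -> bool:
    """Grant the request only if the team exists and stays within its quota."""
    quota = quotas.get(team)
    if quota is None or count <= 0:
        return False
    if quota.gpus_in_use + count > quota.max_gpus:
        return False
    # Record the allocation so later requests see the updated usage.
    quota.gpus_in_use += count
    return True


if __name__ == "__main__":
    quotas = {"search": TeamQuota(max_gpus=4)}
    print(request_gpus(quotas, "search", 3))  # within quota
    print(request_gpus(quotas, "search", 2))  # would exceed quota
```

The developer sees a single request that either succeeds or is refused; the policy itself lives in the platform and can change without touching application code.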

3. Codifying Recommended Paths for AI Development

A critical responsibility of platform engineers in an AI-native environment is defining and codifying recommended paths for AI application development. These paths embed organizational standards and best practices directly into platform workflows, reducing ambiguity, risk, and cognitive load for development teams.

Typical elements include:

- Pre-built templates for LLM integration and orchestration

- Standardized architectures for retrieval-augmented generation (RAG)

- Secure and compliant data access patterns

- Reusable models, datasets, and shared AI components

By operationalizing these patterns within the platform, AI development becomes accessible to a much broader set of teams—including those without deep machine learning expertise. Innovation is democratized across the enterprise while maintaining architectural consistency, security, and compliance. Platform engineering thus becomes a key enabler of responsible, scalable AI adoption.
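A codified path can be as simple as an ordered list of required stages that the platform checks each pipeline against. The stage names in `RAG_PATH` are an assumed, typical breakdown of a RAG workflow, not a standard, and `follows_path` is a hypothetical helper. Teams may add extra stages, but the required ones must all appear in order.

```python
# Assumed stage names for a recommended RAG path; adjust to local standards.
RAG_PATH = ["ingest", "chunk", "embed", "index", "retrieve", "generate"]


def follows_path(pipeline: list[str], path: list[str]) -> bool:
    """True if every stage in `path` appears in `pipeline`, in the same order.

    Extra stages in the pipeline are allowed. The shared iterator is consumed
    as stages are found, which is what enforces the ordering.
    """
    remaining = iter(pipeline)
    return all(stage in remaining for stage in path)


if __name__ == "__main__":
    custom = ["ingest", "clean", "chunk", "embed", "index", "retrieve", "rerank", "generate"]
    print(follows_path(custom, RAG_PATH))
```

A check like this lets the platform accept reasonable variations while still rejecting pipelines that skip or reorder a required step.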

4. Platform as a Product, Intelligence as a Feature

In an AI-native world, platforms must be treated explicitly as products, with clearly defined user experiences, feedback loops, and success metrics. AI is no longer just a consumer of platform services—it becomes a core feature of the platform itself.

This product mindset ensures that platforms are:

- Smarter by design, continuously learning from usage patterns and outcomes

- More intuitive to use through natural language and intent-driven interfaces

- Continuously improving as intelligence refines workflows, recommendations, and automation

Platform teams must carefully balance autonomy and control, ensuring that intelligent behavior aligns with organizational objectives, governance requirements, and trust boundaries. Done well, the platform becomes a living system—one that evolves alongside both developers and the business.

Accelerating the Software Development Lifecycle

The integration of Generative AI into AI-native platforms accelerates the software development lifecycle end to end. By embedding intelligence across planning, development, testing, deployment, and operations, platforms eliminate traditional sources of friction and delay.

Key acceleration mechanisms include:

- Reducing cognitive overhead through intent-driven, natural language interactions

- Automating repetitive, complex, and error-prone tasks by default

- Removing handoffs and bottlenecks between tools and teams

- Enabling faster, safer, and more predictable delivery cycles

AI-native platforms can generate and optimize code, execute and validate tests, manage deployments, and continuously enforce best practices. Feedback loops ensure that systems learn from runtime behavior and user outcomes, improving reliability and performance over time.
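A runtime feedback loop can be illustrated with a very small example: smooth the error rates observed during a rollout and use the result to decide whether to continue. The exponential moving average, the `alpha` and `threshold` values, and the function names are assumptions chosen for the sketch, not values recommended by the article.

```python
def ema(values: list[float], alpha: float = 0.3) -> float:
    """Exponential moving average; recent observations weigh more than old ones."""
    if not values:
        raise ValueError("at least one observation is required")
    smoothed = values[0]
    for value in values[1:]:
        smoothed = alpha * value + (1 - alpha) * smoothed
    return smoothed


def rollout_decision(error_rates: list[float], threshold: float = 0.02) -> str:
    """Promote the release if the smoothed error rate stays at or below the threshold."""
    return "promote" if ema(error_rates) <= threshold else "rollback"


if __name__ == "__main__":
    print(rollout_decision([0.01, 0.01, 0.01]))
    print(rollout_decision([0.01, 0.05, 0.08]))
```

Smoothing is used here so that a single noisy sample does not trigger a rollback; a production system would likely weigh several signals rather than one metric.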

The result is not merely faster delivery, but higher-quality software—built with fewer defects, greater consistency, and stronger alignment to organizational standards. Development shifts from managing complexity to shaping intelligent systems that can adapt, optimize, and evolve continuously.

The Dawn of Intelligent Development Ecosystems

In conclusion, AI-native development is not an incremental upgrade—it is a fundamental shift in how software is designed, built, and evolved. By embedding intelligence at the foundation, platforms move beyond static execution to become adaptive systems that learn, optimize, and respond to change continuously. This redefines both platform architecture and the developer experience, placing intelligence at the core rather than at the edges.

As a result, platform engineers emerge as architects of intelligent ecosystems, and developers become collaborators with AI rather than mere code producers. Software development in this new era is not only faster and more automated—it is inherently smarter, more resilient, and better equipped to handle growing complexity.